Contents

- Component algorithms in the default pipeline
- 1.1. Camera Init
- 1.2. Feature Extraction
- 1.3. Image Matching
- 1.4. Feature Matching
- 1.5. Structure From Motion (sparse reconstruction)
- 1.6. Prepare Dense Scene
- 1.7. Depth Map
- 1.8. Depth Map Filter
- 1.9. Meshing
- 1.10. Mesh Filtering
- 1.11. Texturing

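Component algorithms in the default pipeline

Based on the AliceVision documentation and the documentation bundled with Meshroom.

- The figure below shows Meshroom's default pipeline. Parameters connected by solid lines hold their initially set values; parameters connected by dashed lines hold values set by the implementation.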

1.1. Camera Init

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| Viewpoints | | | Input viewpoints (1 element for each loaded image): ID; Pose ID; Image Path; Intrinsic: Internal Camera Parameters (Intrinsic ID); Rig (-1 - 200); Rig Sub-Pose: Rig Sub-Pose Parameters (-1 - 200); Image Metadata (list of metadata elements). Takes the image paths as input and reads the information of each image file. |
| Intrinsics | | | Camera intrinsics (1 element for each loaded image): ID; Initial Focal Length: Initial Guess on the Focal Length; Focal Length: Known/Calibrated Focal Length; Camera Type: 'pinhole', 'radial1', 'radial3', 'brown', 'fisheye4'; #Make: Camera Make (not included in this build, commented out); #Model: Camera Model; #Sensor Width: Camera Sensor Width; Width: Image Width (0-10000); Height: Image Height (0-10000); Serial Number: Device Serial Number (camera and lens combined); Principal Point: X (0-10000), Y (0-10000); DistortionParams: Distortion Parameters; Locked (True/False): if the camera has been calibrated, the internal camera parameters (intrinsics) can be locked, which should improve robustness and speed up the reconstruction. These are the camera's built-in default parameters. |
| Sensor Database | | | Camera sensor width database path (database of camera models and their parameters) |
| | Default Field Of View | | Empirical value for the camera field of view in degrees: 45° (0°-180°) |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace), i.e. how detailed the log is |
| | | SfMData File | …/cameraInit.sfm. Path of the output SfM data file |

Imports the images.
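To make the role of the Default Field Of View setting more concrete, here is a minimal sketch of how an initial focal length in pixels could be guessed for a pinhole camera when no calibration is available. The function names are hypothetical, and which image dimension the field of view refers to is an assumption, not something confirmed by the Meshroom documentation.

```python
import math


def initial_focal_px(image_width_px: float, fov_deg: float = 45.0) -> float:
    """Guess a focal length in pixels from a field of view (pinhole model).

    Assumption: the field of view spans the image width.
    """
    if not 0.0 < fov_deg < 180.0:
        raise ValueError("field of view must be strictly between 0 and 180 degrees")
    return (image_width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def focal_px_from_sensor(focal_mm: float, sensor_width_mm: float, image_width_px: float) -> float:
    """Convert a focal length in millimetres to pixels using the sensor width."""
    return focal_mm * image_width_px / sensor_width_mm


if __name__ == "__main__":
    print(initial_focal_px(4000))            # fallback guess from the default 45 degrees
    print(focal_px_from_sensor(50, 36, 6000))  # when the sensor width is known
```

The second function illustrates why a sensor width database is useful: with a known sensor width, the focal length stored in the image metadata can be converted to pixels instead of relying on the empirical field of view.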

1.2. Feature Extraction

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | SfMData | SfMData file. Path of the input SfM data file |
| | Describer Types | | Describer types used to describe an image: 'sift', 'sift_float', 'sift_upright', 'akaze', 'akaze_liop', 'akaze_mldb', 'cctag3', 'cctag4', 'sift_ocv', 'akaze_ocv'. Selects the feature detection algorithm |
| | Describer Preset | | Controls the ImageDescriber configuration (low, medium, normal, high, ultra). The "ultra" configuration can take a long time! (Presumably controls the precision of the features.) |
| | Force CPU Extraction | | Use only CPU feature extraction |
| | Max Nb Threads | | Specifies the maximum number of threads to run simultaneously (0 for automatic mode). (0-24) 0 |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Features Folder | Output path for the features and descriptors files (`*.feat`, `*.desc`) |

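The goal of this step is to extract distinctive groups of pixels that are, to some extent, invariant to changes in camera viewpoint during image acquisition. A feature in the scene should therefore have a similar description in every image.

There are roughly three common feature detection methods (SIFT, AKAZE, CCTag).

- Paper surveying common feature detection methods: A survey of recent advances in visual feature detection, Yali Li, Shengjin Wang, Qi Tian, Xiaoqing Ding, 2015

- SIFT (Scale-Invariant Feature Transform) was originally designed to extract discriminative patches in a first image that can be compared with the discriminative patches of a second image regardless of rotation, translation, and scale. Because a relevant detail only exists at a certain scale, the extracted patches are centred on stable points of interest. To some extent, SIFT's invariance can be used to cope with the image transformations that occur when the viewpoint changes during acquisition.

- AKAZE (Accelerated-KAZE) can perform well when extracting features on challenging surfaces (such as skin).

- Paper: AKAZE Fast explicit diffusion for accelerated features in nonlinear scale spaces, P.F. Alcantarilla, J. Nuevo, A. Bartoli, 2013

- Pro: it can match across wider angles than SIFT.

- Con: it may extract a lot of features, and their repartition (distribution over the image) is not always good.

- CCTag is a marker type with 3 or 4 crowns. Markers can be placed in the scene during the shoot to automatically re-orient the scene and re-scale it to a known size. CCTag is robust to motion blur, depth of field, and occlusion. Make sure there is enough white margin around the CCTags.

Because texture complexity varies (from one image to another, or across different parts of an image), the number of extracted features can vary a lot. A post-filtering step therefore keeps the number of extracted features within a reasonable range (for example between 1 and 10 thousand per image). Grid filtering is used to ensure a good repartition of the features over the image.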
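The grid filtering idea can be sketched as follows. This is an illustrative approximation, not AliceVision's implementation: the function name, the `(x, y, scale)` keypoint layout, the `grid_size` parameter, and the choice of ranking features by scale are all assumptions made for the example.

```python
from collections import defaultdict


def grid_filter(keypoints, width, height, max_features, grid_size=4):
    """Keep at most max_features keypoints, spread over a grid_size x grid_size grid.

    keypoints: list of (x, y, scale) tuples. Within a cell, larger scales are
    preferred (an assumption made for this sketch).
    """
    cells = defaultdict(list)
    for kp in keypoints:
        x, y, _ = kp
        col = min(int(x * grid_size / width), grid_size - 1)
        row = min(int(y * grid_size / height), grid_size - 1)
        cells[(row, col)].append(kp)
    for bucket in cells.values():
        bucket.sort(key=lambda kp: kp[2], reverse=True)

    kept = []
    rank = 0
    # Round-robin: take the best keypoint of every cell, then the second best, ...
    while len(kept) < max_features:
        added = False
        for key in sorted(cells):
            bucket = cells[key]
            if rank < len(bucket):
                kept.append(bucket[rank])
                added = True
                if len(kept) == max_features:
                    break
        if not added:
            break
        rank += 1
    return kept


if __name__ == "__main__":
    kps = [(10, 10, 1.0), (12, 11, 5.0), (15, 9, 3.0), (90, 90, 0.5)]
    print(grid_filter(kps, width=100, height=100, max_features=2, grid_size=2))
```

Even though three of the four keypoints fall into the same cell, the round-robin selection keeps one keypoint from each occupied cell before taking a second one from the crowded cell.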

1.3. Image Matching

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | SfMData | SfMData file |
| Features Folders | | Features Folders | Folder(s) containing the extracted features and descriptors |
| | Method | | Method used to select the image pairs to match (VocabularyTree; Sequential; SequentialAndVocabularyTree; Exhaustive; Frustum; FrustumOrVocabularyTree) |
| | Voc Tree: Tree | | Input name for the vocabulary tree file (ALICEVISION_VOCTREE). When the vocabulary tree method is selected, this is the path of the vocabulary tree file |
| | Voc Tree: Weights | | Input name for the weight file. If not provided, the weights are computed on the database built with the provided set |
| | Minimal Number of Images | | Minimal number of images required to use the vocabulary tree. With fewer images than this threshold, all matching combinations are computed |
| | Max Descriptors | | Limit on the number of descriptors loaded per image. Zero means no limit |
| | Nb Matches | | The number of matches to retrieve for each image (0 retrieves all the matches). 50 (0-1000) |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Image Pairs | File path of the output file listing the selected image pairs |

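Finds the images that look at the same areas of the scene. Each image is reduced to a compact image descriptor so that the distances between all image descriptors can be computed; comparing these descriptors shows whether different images share the same content.

The available methods are:

- Vocabulary Tree: uses image retrieval techniques to find images that share some content, without paying the cost of resolving all feature matches in detail. Each image is represented by a compact image descriptor, which makes computing the distances between all image descriptors very efficient.

- Sequential: if the input is a video sequence, this option links images to their neighbours over time.

- SequentialAndVocabularyTree: additionally creates links between keyframes taken at different times.

- Exhaustive: outputs all image pairs.

- Frustum: if the images have known poses, computes the intersections between the camera frustums to create the list of image pairs.

- FrustumOrVocabularyTree: if the images have known poses, uses the frustum intersection; otherwise uses VocabularyTree.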
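The two simplest strategies, Exhaustive and Sequential, can be illustrated with a short sketch. The function names and the `nb_neighbors` parameter are hypothetical and only serve the example; the actual node writes the selected pairs to the Image Pairs file.

```python
from itertools import combinations


def exhaustive_pairs(view_ids):
    """Every possible image pair: n * (n - 1) / 2 pairs for n images."""
    return list(combinations(sorted(view_ids), 2))


def sequential_pairs(view_ids, nb_neighbors=2):
    """Pair each image with the next nb_neighbors images in sequence order.

    Assumption: sorting the ids reproduces the capture order of the video.
    """
    ordered = sorted(view_ids)
    pairs = []
    for i, a in enumerate(ordered):
        for b in ordered[i + 1 : i + 1 + nb_neighbors]:
            pairs.append((a, b))
    return pairs


if __name__ == "__main__":
    ids = [1, 2, 3, 4, 5]
    print(len(exhaustive_pairs(ids)), "exhaustive pairs")
    print(sequential_pairs(ids, nb_neighbors=1))
```

The exhaustive list grows quadratically with the number of images, which is why retrieval-based methods such as the vocabulary tree are preferred for large datasets.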

1.4. Feature Matching

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | SfMData | SfMData file |
| Features Folders | | Features Folders | Folder(s) containing the extracted features and descriptors |
| Image Pairs | | | Path to a file which contains the list of image pairs to match |
| Describer Types | | Describer Types | Describer types used to describe an image: **'sift'**, 'sift_float', 'sift_upright', 'akaze', 'akaze_liop', 'akaze_mldb', 'cctag3', 'cctag4', 'sift_ocv', 'akaze_ocv' |
| | Photometric Matching Method | | For scalar-based region descriptors: BRUTE_FORCE_L2 (L2 brute-force matching); ANN_L2 (L2 approximate nearest neighbor matching); CASCADE_HASHING_L2 (L2 cascade hashing matching); FAST_CASCADE_HASHING_L2 (L2 cascade hashing with precomputed hashed regions, faster than CASCADE_HASHING_L2 but uses more memory). For binary-based descriptors: BRUTE_FORCE_HAMMING (brute-force Hamming matching) |
| | Geometric Estimator | | Geometric estimator: acransac (A-Contrario Ransac); loransac (LO-Ransac, only available for the fundamental_matrix model) |
| | Geometric Filter Type | | Geometric validation method used to filter feature matches: **fundamental_matrix**, essential_matrix, homography_matrix, homography_growing, no_filtering |
| | Distance Ratio | | Distance ratio used to discard non-meaningful matches. 0.8 (0.0 - 1) |
| | Max Iteration | | Maximum number of iterations allowed in the ransac step. 2048 (1 - 20000) |
| | Max Matches | | Maximum number of matches to keep (0 - 10000) |
| | Save Putative Matches | | Save the putative matches (True/False) |
| | Guided Matching | | Use the found model to improve the pairwise correspondences (True/False) |
| | Export Debug Files | | Export debug files (svg, dot) (True/False) |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Matches Folder | Path to a folder in which the computed matches will be stored |

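The goal of this step is to match all features between the candidate image pairs. It proceeds in two steps:

- Photometric matching: performs photometric matching between the sets of feature descriptors of the two input images. For each feature descriptor in the first image, it looks for the two closest descriptors in the second image and applies a relative threshold between them. This assumption discards features on repetitive structures, but it has proved to be a robust criterion. [Lowe2004]

- Finding the two closest descriptors in the second image for each feature is computationally intensive with a brute-force approach, but many optimized algorithms exist. The most common is Approximate Nearest Neighbor (ANN); Cascading Hashing is an alternative.

- Geometric filtering: filters the photometric match candidates geometrically, using epipolar geometry within the RANSAC (RANdom SAmple Consensus) outlier detection framework. It randomly selects a small set of feature correspondences and computes the fundamental (or essential) matrix, then counts the features that validate this model, and iterates through the RANSAC framework.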
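The relative threshold used in photometric matching (the Distance Ratio setting, 0.8 in the table above) can be sketched with a brute-force L2 search. This is a minimal illustration in pure Python with a hypothetical function name; a real implementation would use ANN or cascade hashing instead of sorting all distances.

```python
import math


def ratio_test_matches(desc_a, desc_b, distance_ratio=0.8):
    """Brute-force L2 matching with a relative threshold between the two best candidates.

    desc_a, desc_b: lists of equal-length numeric tuples (feature descriptors).
    Returns a list of (index_in_a, index_in_b) putative matches.
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the test needs a second-best candidate
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (best, j_best), (second, _) = dists[0], dists[1]
        # Keep the match only if the best candidate is clearly better than the runner-up.
        if best < distance_ratio * second:
            matches.append((i, j_best))
    return matches


if __name__ == "__main__":
    a = [(0.0, 0.0), (5.0, 5.0)]
    b = [(0.1, 0.0), (10.0, 10.0), (5.0, 5.2), (5.2, 5.0)]
    print(ratio_test_matches(a, b))
```

In the usage example the second descriptor of `a` has two almost equally close candidates in `b`, as happens on repetitive structures, so it is discarded while the unambiguous first descriptor is kept.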

1.5. Structure From Motion (sparse reconstruction)

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | | SfMData file |
| Features Folder | | | Folder(s) containing the extracted features and descriptors |
| Matches Folders | | | Folder(s) in which the computed matches are stored |
| Describer Types | | | Describer types used to describe an image: 'sift', 'sift_float', 'sift_upright', 'akaze', 'akaze_liop', 'akaze_mldb', 'cctag3', 'cctag4', 'sift_ocv', 'akaze_ocv' |
| | Localizer Estimator | | Estimator type used to localize cameras (acransac, ransac, lsmeds, loransac, maxconsensus) |
| | Observation Constraint | | Observation constraint mode used in the optimization. Basic: use the standard reprojection error in pixel coordinates. Scale: use the reprojection error in pixel coordinates but relative to the feature scale |
| | Localizer Max Ransac Iterations | | Maximum number of iterations allowed in the ransac step. (1-20000) 4096 |
| | Localizer Max Ransac Error | | Maximum error (in pixels) allowed for camera localization (resectioning). If set to 0, a threshold is selected according to the localizer estimator used (with ACRansac, the input data is analysed to select the optimal value). (0.0-100.0) 0.0 |
| | Lock Scene Previously Reconstructed | | Useful for SfM augmentation. Locks previously reconstructed poses and intrinsics |
| | Local Bundle Adjustment | | Reduces the reconstruction time, especially for large datasets (500+ images), by avoiding computation of the bundle adjustment on areas that are not changing |
| | LocalBA Graph Distance | | Graph-distance limit that defines the active region in the Local Bundle Adjustment strategy. (2-10) 1 |
| | Maximum Number of Matches | | Maximum number of matches per image pair (and per feature type). Useful to get a quick reconstruction overview. 0 means no limit. (0-50000) 1 |
| | Minimum Number of Matches | | Minimum number of matches per image pair (and per feature type). Useful to get a meaningful reconstruction with accurate keypoints. 0 means no limit. (0-50000) 1 |
| | Min Input Track Length | | Minimum track length in input of SfM (2-10) |
| | Min Observation For Triangulation | | Minimum number of observations needed to triangulate a point. Setting it to 3 (or more) drastically reduces the noise in the point cloud, but slightly reduces the number of final poses (by 1.5% to 11% on the tested datasets). (2-10) |
| | Min Angle For Triangulation | | Minimum angle for triangulation. (0.1-10) 3.0 |
| | Min Angle For Landmark | | Minimum angle for landmark. (0.1-10) 2.0 |
| | Max Reprojection Error | | Maximum reprojection error. (0.1-10) 4.0 |
| | Min Angle Initial Pair | | Minimum angle for the initial pair. (0.1-10) 5.0 |
| | Max Angle Initial Pair | | Maximum angle for the initial pair. (0.1-60) 40.0 |
| | Use Only Matches From Input Folder | | Use only matches from the input matchesFolder parameter. Matches folders previously added to the SfMData file are ignored |
| | Use Rig Constraint | | Enable/disable the rig constraint |
| | Force Lock of All Intrinsic Camera Parameters | | Keep all the intrinsic parameters of the cameras (focal length, principal point, distortion if any) constant during the reconstruction. This may be helpful if the input cameras are already fully calibrated |
| | Filter Track Forks | | Enable/disable the removal of track forks. A track contains a fork when incoherent matches lead to multiple features in the same image for a single track |
| | Initial Pair A | | Filename of the first image (without path) |
| | Initial Pair B | | Filename of the second image (without path) |
| | Inter File Extension | | Extension of the intermediate file export ('.abc', '.ply') |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Output SfMData File (SfMData) | Path to the output SfMData file (sfm.abc) |
| | | Output SfMData File (Views and Poses) | Path to the output SfMData file with cameras (views and poses) (cameras.sfm) |
| | | Output Folder | Folder for intermediate reconstruction files and additional reconstruction information files, i.e. where all Structure From Motion files are saved |

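This step analyses the feature matches to understand the geometric relationship behind all the 2D observations, and infers the rigid scene structure (3D points) together with the pose (position and orientation) and internal calibration of all cameras.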

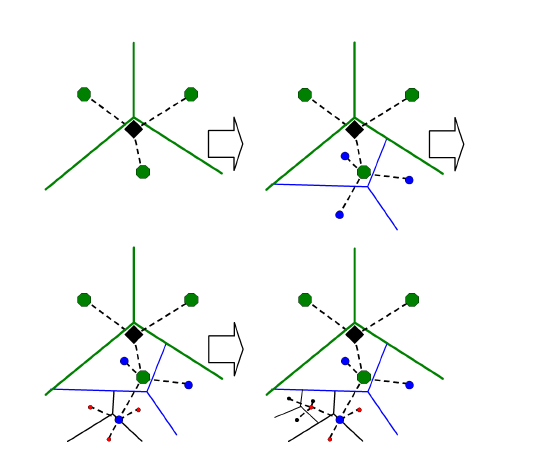

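The pipeline is a growing reconstruction process (incremental SfM).

It first computes an initial two-view reconstruction, which is then iteratively extended by adding new views.

1. Fuse 2-View Matches into Tracks: fuses all feature matches between image pairs into tracks. Each track represents a candidate point in space, visible from multiple cameras. At this stage of the pipeline, however, the tracks still contain many outliers.

2. Initial Image Pair: chooses the best initial image pair. This choice is critical for the quality of the final reconstruction. The pair should maximize the number of matches and the repartition of the corresponding features in each image, while the angle between the cameras should also be large enough to provide reliable geometric information.

3. Initial 2-View Geometry: computes the fundamental matrix between the two selected images and takes the first image as the origin of the coordinate system.

4. Triangulate: using the poses of the first two cameras, triangulates the corresponding 2D features into 3D points.

5. Next Best View Selection: selects all the images that have enough associations with features already reconstructed in 3D.

6. Estimate New Cameras: based on these 2D-3D associations, performs the resectioning of each of these new cameras. Resectioning is a Perspective-n-Point (PnP) algorithm in a RANSAC framework that finds the camera pose validating most of the feature associations. A non-linear minimization is then performed on each camera to refine the pose.

7. Triangulate: from these new camera poses, some tracks become visible from two or more resected cameras and are triangulated.

8. Optimize: performs a bundle adjustment to refine everything: the extrinsic and intrinsic parameters of all cameras and the positions of all 3D points. The result is filtered by removing all observations that have a high reprojection error or an insufficient angle between observations.

9. Loop from 5 to 9: since new points have been triangulated, more image candidates become available for the next best view selection, so steps 5 to 9 are repeated. Cameras are added, new 2D features are triangulated into 3D points, and 3D points that have become invalid are removed, until no new view can be localized.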
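The first step of the list above, fusing pairwise matches into tracks, together with the Filter Track Forks and Min Input Track Length settings, can be sketched with a union-find structure. This is an illustrative sketch: the function name and the `(view_id, feature_id)` data layout are assumptions, not AliceVision's actual data structures.

```python
def build_tracks(pairwise_matches, min_track_length=2):
    """Fuse 2-view matches into tracks with union-find.

    pairwise_matches: iterable of ((view_a, feat_a), (view_b, feat_b)).
    Tracks with a fork (two features of the same view) or fewer than
    min_track_length observations are dropped.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in pairwise_matches:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    groups = {}
    for node in list(parent):
        groups.setdefault(find(node), []).append(node)

    tracks = []
    for members in groups.values():
        views = [view for view, _ in members]
        has_fork = len(views) != len(set(views))
        if not has_fork and len(members) >= min_track_length:
            tracks.append(sorted(members))
    return sorted(tracks)


if __name__ == "__main__":
    matches = [
        ((0, 10), (1, 20)), ((1, 20), (2, 30)),   # consistent track over 3 views
        ((0, 11), (1, 21)), ((1, 21), (0, 12)),   # fork: view 0 appears twice
    ]
    print(build_tracks(matches))
```

Each surviving track is a candidate 3D point; the later steps (triangulation and bundle adjustment) decide whether it is geometrically valid.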

Localizer estimator:

SfM reconstruction:

1.6. Prepare Dense Scene

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | SfMData | SfMData file |
| ImagesFolders | | | Use images from specific folder(s). The filename should be the same or the image uid |
| | Output File Type | | Output file type for the undistorted images (jpg, png, tif, exr) |
| | Save Metadata | | Save projections and intrinsics information in the image metadata (only for .exr images) |
| | Save Matrices Text Files | | Save projections and intrinsics information in text files |
| | Correct images exposure | | Apply a correction on the images' Exposure Value |
| | Verbose Level | | Verbosity level ('fatal', 'error', 'warning', 'info', 'debug', 'trace') |
| | | Undistorted images | List of undistorted images (the path where they are saved) |
| | | Images Folder | MVS configuration file (desc.Node.internalFolder + 'mvs.ini') |

This node exports undistorted images, so that depth maps and textures can be computed on pinhole images without distortion.
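To illustrate what undistortion means for a single point, here is a minimal sketch of a three-coefficient radial distortion model and its numerical inverse. The polynomial form and the fixed-point inversion are a common textbook formulation assumed for this example; the exact model behind the 'radial3' camera type should be checked against the AliceVision sources.

```python
def distort_radial3(x, y, k1, k2, k3):
    """Apply radial distortion to normalized image coordinates (x, y)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    return x * factor, y * factor


def undistort_radial3(xd, yd, k1, k2, k3, iterations=20):
    """Invert the distortion by fixed-point iteration.

    Converges for mild distortion; there is no closed-form inverse in general.
    """
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
        x, y = xd / factor, yd / factor
    return x, y


if __name__ == "__main__":
    xd, yd = distort_radial3(0.3, -0.2, -0.1, 0.01, 0.0)
    print(undistort_radial3(xd, yd, -0.1, 0.01, 0.0))  # close to (0.3, -0.2)
```

Undistorting a whole image applies this kind of mapping to every pixel and resamples the colours, which is why the downstream nodes can treat the exported images as ideal pinhole images.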

1.7. Depth Map

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | SfMData | SfMData file |
| Images Folder | | Images Folder | Use images from a specific folder instead of those specified in the SfMData file. The filename should be the image uid. (These are the exr images output by Prepare Dense Scene.) |
| | Downscale | | Image downscale factor (1, 2, 4, 8, 16) |
| | Min View Angle | | Minimum angle between two views (0.0 - 10.0) |
| | Max View Angle | | Maximum angle between two views (10.0 - 120.0) |
| | SGM: Nb Neighbour Cameras | | Semi Global Matching: number of neighbour cameras (1 - 100) |
| | SGM: WSH | | Semi Global Matching: half-size of the patch used to compute the similarity (1 - 20) |
| | SGM: GammaC | | Semi Global Matching: GammaC threshold (0 - 30) |
| | SGM: GammaP | | Semi Global Matching: GammaP threshold (0 - 30) |
| | Refine: Number of samples | | (1 - 500) |
| | Refine: Number of Depths | | (1 - 100) |
| | Refine: Number of Iterations | | (1 - 500) |
| | Refine: Nb Neighbour Cameras | | Refine: number of neighbour cameras (1 - 20) |
| | Refine: WSH | | Refine: half-size of the patch used to compute the similarity (1 - 20) |
| | Refine: Sigma | | Refine: Sigma threshold (0 - 30) |
| | Refine: GammaC | | Refine: GammaC threshold (0 - 30) |
| | Refine: GammaP | | Refine: GammaP threshold (0 - 30) |
| | Refine: Tc or Rc pixel size | | Use the minimum pixel size of the neighbour cameras (Tc) or the current camera pixel size (Rc) |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Output | Output folder for the generated depth maps |

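For every camera that has been resolved by SfM, this step retrieves the depth value of each pixel. Approaches include:

- Block Matching

- Semi-Global Matching (SGM): Accurate and efficient stereo processing by semi-global matching and mutual information, H. Hirschmüller, CVPR 2005 (this is the method used by Meshroom)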
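The core of Semi-Global Matching is a cost aggregation along image paths that penalizes changes of depth between neighbouring pixels. The sketch below implements the recurrence from Hirschmüller's paper for a single left-to-right path in pure Python. The penalty names `p1` and `p2` and the toy cost values are assumptions for the example; how AliceVision's own SGM settings map onto these penalties is not stated in the source.

```python
def aggregate_scanline(costs, p1=1.0, p2=4.0):
    """Aggregate matching costs along one scanline (a single SGM path).

    costs[x][d] is the matching cost of pixel x at depth/disparity index d.
    p1 penalizes a change of one index, p2 penalizes larger jumps.
    """
    aggregated = [list(costs[0])]
    for x in range(1, len(costs)):
        prev = aggregated[-1]
        prev_min = min(prev)
        row = []
        for d, cost in enumerate(costs[x]):
            candidates = [prev[d], prev_min + p2]
            if d > 0:
                candidates.append(prev[d - 1] + p1)
            if d + 1 < len(prev):
                candidates.append(prev[d + 1] + p1)
            # Subtracting prev_min keeps the values bounded along the path.
            row.append(cost + min(candidates) - prev_min)
        aggregated.append(row)
    return aggregated


def winner_takes_all(cost_rows):
    """Pick the index with the lowest cost for every pixel."""
    return [min(range(len(row)), key=row.__getitem__) for row in cost_rows]


if __name__ == "__main__":
    costs = [[0, 5, 5], [0, 5, 5], [4, 5, 3.5], [0, 5, 5]]
    print(winner_takes_all(costs))                      # raw costs: pixel 2 is an outlier
    print(winner_takes_all(aggregate_scanline(costs)))  # aggregation smooths it away
```

A full SGM implementation sums the aggregated costs of several path directions before selecting the winner; a single path is enough to show how the smoothness penalties suppress isolated outliers.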

1.8. Depth Map Filter

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | SfMData | SfMData file |
| Depth Map Folder | | | Input depth map folder |
| | Min View Angle | | Minimum angle between two views |
| | Max View Angle | | Maximum angle between two views |
| | Number of Nearest Cameras | | Number of nearest cameras used for filtering. 10 (0 - 20) |
| | Min Consistent Cameras | | Minimum number of consistent cameras. 3 (0 - 10) |
| | Min Consistent Cameras Bad Similarity | | Minimum number of consistent cameras for pixels with a weak similarity value. 4 (0 - 10) |
| | Filtering Size in Pixels | | Filtering size in pixels (0 - 10) |
| | Filtering Size in Pixels Bad Similarity | | Filtering size in pixels (0 - 10) |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Filtered DepthMaps Folder | Output folder for the generated depth maps |

Filters out depth values that are not coherent across multiple depth maps. This removes unstable points before all depth maps are fused in the Meshing node and ensures consistency between multiple cameras. A compromise is chosen based on the similarity value and the number of coherent cameras, so that weakly supported surfaces are kept without adding artefacts.
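The consistency criterion can be sketched as a simple vote. This is a simplified illustration under strong assumptions: the neighbouring depth values are taken to be already reprojected into the reference view, the relative tolerance `rel_tolerance` is a hypothetical parameter, and invalid depths are marked with `-1.0`. Only the idea behind Min Consistent Cameras is shown.

```python
def is_consistent(depth, neighbour_depths, min_consistent_cameras=3, rel_tolerance=0.01):
    """True if enough neighbouring cameras agree with the depth of a pixel.

    neighbour_depths: depths of the same pixel as seen from neighbouring cameras,
    assumed to be already expressed in the reference view; values <= 0 are invalid.
    """
    agreeing = sum(
        1 for d in neighbour_depths
        if d > 0 and abs(d - depth) <= rel_tolerance * depth
    )
    return agreeing >= min_consistent_cameras


def filter_depths(depths, neighbour_depths_per_pixel, **kwargs):
    """Replace inconsistent depths with -1.0 (invalid)."""
    return [
        d if d > 0 and is_consistent(d, neighbours, **kwargs) else -1.0
        for d, neighbours in zip(depths, neighbour_depths_per_pixel)
    ]


if __name__ == "__main__":
    depths = [2.0, 2.0]
    neighbours = [[2.01, 1.99, 2.0, 5.0], [2.5, 1.0, 2.0]]
    print(filter_depths(depths, neighbours))
```

Requiring more agreeing cameras for pixels with a weak similarity value, as the Min Consistent Cameras Bad Similarity setting suggests, would amount to calling the same test with a larger `min_consistent_cameras` for those pixels.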

1.9. Meshing

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| SfMData | | | SfMData file |
| Depth Maps Folder | | | Input depth maps folder |
| | Filtered Depth Maps Folder | | Input filtered depth maps folder |
| | Estimate Space From SfM | | Estimate the 3D space from the SfM |
| | Min Observations For SfM Space Estimation | | Minimum number of observations for SfM space estimation. (0-100) 3 |
| | Min Observations Angle For SfM Space Estimation | | Minimum angle between two observations for SfM space estimation. (0-120) 10 |
| | Max Input Points | | Maximum number of input points loaded from depth map images (500000 - 500000000) |
| | Max Points | | Maximum number of points at the end of the depth maps fusion (100000 - 10000000) |
| | Max Points Per Voxel | | (500000 - 30000000) |
| | Min Step | | The step used to load depth values from depth maps is computed from maxInputPts. This sets the minimal value of that step, so that on small datasets not too much time is spent at the beginning loading all depth values. (1 - 20) 2 |
| | Partitioning | | (singleBlock, auto) |
| | Repartition | | (multiResolution, regularGrid) |
| | angleFactor | | (0.0-200.0) 15.0 |
| | simFactor | | (0.0-200.0) 1.0 |
| | pixSizeMarginInitCoef | | (0.0-10.0) 2.0 |
| | pixSizeMarginFinalCoef | | (0.0-10.0) 4.0 |
| | voteMarginFactor | | (0.1-10.0) 4.0 |
| | contributeMarginFactor | | (0.0-10.0) 2.0 |
| | simGaussianSizeInit | | (0.0-50) 10.0 |
| | simGaussianSize | | (0.0-50) 0.1 |
| | minAngleThreshold | | (0.0-10.0) 0.01 |
| | Refine Fuse | | Refine the depth map fusion with the new pixel size defined by the angle and similarity scores |
| | Add Landmarks To The Dense Point Cloud | | Add the SfM landmarks to the dense point cloud |
| | Colorize Output | | Whether to colorize the output dense point cloud and mesh |
| | Save Raw Dense Point Cloud | | Save the dense point cloud before cut and filtering |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Output Mesh | Output mesh (OBJ file format). mesh.obj |
| | | Output Dense Point Cloud | Output dense point cloud with visibilities (SfMData file format). densePointCloud.abc |

Creates a dense geometric surface representation of the scene.

After a 3D Delaunay tetrahedralization, weights are computed on the cells and on the facets connecting the cells.

1.10. Mesh Filtering

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| Mesh | | | Input mesh (OBJ file format) |
| | Keep Only the Largest Mesh | | Keep only the largest connected group of triangles (True/False). Disabled by default in the 2019.1.0 release, to avoid meshing the environment instead of the object of interest: the largest mesh is in some cases the reconstructed background, and when the object of interest is not connected to the large background mesh it is removed. Place the object of interest on a well-structured surface that is neither transparent nor reflective (e.g. a newspaper). |
| | Smoothing Subset | | (all; surface_boundaries; surface_inner_part) |
| | Smoothing Boundaries Neighbours | | Neighbours of the boundaries to consider. 0 (0 - 20) |
| | Smoothing Iterations | | 5 (0 - 50) |
| | Smoothing Lambda | | 1 (0 - 10) |
| | Filtering Subset | | (all; surface_boundaries; surface_inner_part) |
| | Filtering Iterations | | 1 (0 - 20) |
| | Filter Large Triangles Factor | | Remove all large triangles. A triangle is considered large if one edge is bigger than N times the average edge length. Set to zero to disable. 60 (1 - 100) |
| | Filter Triangles Ratio | | Remove all triangles by ratio (largest edge / smallest edge). Set to zero to disable. 0 (0 - 50) |
| | Verbose Level | | Verbosity level ('fatal', 'error', 'warning', 'info', 'debug', 'trace') |
| | | Output mesh | Output mesh (OBJ file format). internalFolder + 'mesh.obj' |

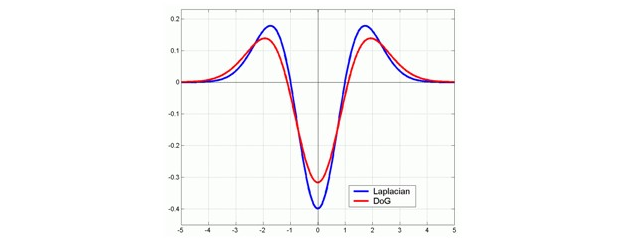

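Graph cut (max-flow): optimally cuts the volume.

This cut represents the extracted mesh surface. Bad cells on the surface are filtered out. Finally, a Laplacian filter is applied to the mesh to remove local artefacts.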
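The Laplacian filtering mentioned above can be sketched as follows: each vertex is moved towards the centroid of its neighbours. The function name is hypothetical, and treating `lam` and `iterations` like the Smoothing Lambda and Smoothing Iterations settings is an assumption; the exact update rule used by AliceVision may differ.

```python
def laplacian_smooth(vertices, triangles, iterations=5, lam=1.0):
    """Uniform Laplacian smoothing of a triangle mesh.

    vertices: list of (x, y, z) tuples; triangles: list of (i, j, k) vertex indices.
    lam = 0 leaves the mesh unchanged, lam = 1 moves each vertex onto the
    centroid of its neighbours.
    """
    neighbours = [set() for _ in vertices]
    for a, b, c in triangles:
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))

    points = [tuple(v) for v in vertices]
    for _ in range(iterations):
        new_points = []
        for i, p in enumerate(points):
            if not neighbours[i]:
                new_points.append(p)  # isolated vertex: nothing to average
                continue
            n = len(neighbours[i])
            centroid = tuple(sum(points[j][k] for j in neighbours[i]) / n for k in range(3))
            new_points.append(tuple(p[k] + lam * (centroid[k] - p[k]) for k in range(3)))
        points = new_points
    return points


if __name__ == "__main__":
    # A flat fan of four triangles with a spike at the centre vertex.
    verts = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
    tris = [(0, 1, 2), (0, 2, 3), (0, 3, 4), (0, 4, 1)]
    print(laplacian_smooth(verts, tris, iterations=1)[0])  # the spike is flattened
```

Repeated iterations also shrink the mesh, which is one reason why smoothing can be restricted to a subset such as surface boundaries or the inner part of the surface.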

1.11. Texturing

| Input | Setting | Output | Description |
| --- | --- | --- | --- |
| Images Folder | | | Use images from a specific folder instead of those specified in the SfMData file. The filename should be the image uid |
| Other Input Mesh (Mesh) | | | Optional input mesh to texture. By default, the result of the reconstruction is textured |
| Input Dense Reconstruction | | | Path to the dense reconstruction result (mesh with per-vertex visibility) |
| | Texture Side | | Output texture size: 1024, 2048, 4096, 8192, 16384 |
| | Texture Downscale | | Texture downscale factor: 1, 2, 4, 8 |
| | Texture File Type | | Texture file type: 'jpg', 'png', 'tiff', 'exr' |
| | Unwrap Method | | Method used to unwrap the input mesh if it does not have UV coordinates. Basic (> 600k faces): fast and simple, can generate multiple atlases. LSCM (<= 600k faces): optimizes space, generates one atlas. ABF (<= 300k faces): optimizes space and stretch, generates one atlas |
| | Use UDIM | | Use UDIM UV mapping (True/False) |
| | Fill Holes | | Fill texture holes with plausible values (True/False) |
| | Padding | | Texture edge padding size in pixels. 5 (0-100) |
| | multiBandDownscale | | Width of the frequency bands for multi-band blending. 4 (0 - 8) |
| | multiBandNbContrib (MultiBand contributions) | | Number of contributions per frequency band for multi-band blending (each frequency band also contributes to the lower bands) |
| | useScore | | Use triangle scores (i.e. reprojection area) for multi-band blending (True/False) |
| | Best Score Threshold | | Threshold relative to the best score; 0.0 disables this filtering. 0.1 (0.0 - 1.0) |
| | Angle Hard Threshold | | 0.0 disables the angle hard threshold filtering. 90 (0.0 - 180.0) |
| | Process Colorspace | | (sRGB; LAB; XYZ) Colorspace for the internal texturing computation (does not impact the colorspace of the output file) |
| | Correct Exposure | | Uniformize the images' exposure values (True/False) |
| | Force Visible By All Vertices | | Triangle visibility is based on the union of the vertices' visibility (True/False) |
| | Flip Normals | | Option to flip face normals. It can be needed because it depends on the order of the vertices in triangles, and the convention changes from one software to another (True/False) |
| | Visibility Remapping Method | | Method used to remap visibilities from the reconstruction to the input mesh (Pull, Push, PullPush) |
| | Subdivision Target Ratio | | Percentage of the density of the reconstruction used as the target for the subdivision (0: disable subdivision; 0.5: half the density of the reconstruction; 1: full density of the reconstruction). 0.8 (0.0 - 1.0) |
| | Verbose Level | | Verbosity level (fatal, error, warning, info, debug, trace) |
| | | Output Folder | Folder for the output mesh: OBJ, material and texture files |
| | | Output Mesh | Folder for the output mesh: OBJ, material and texture files. internalFolder + 'texturedMesh.obj' |
| | | Output Material | Folder for the output mesh: OBJ, material and texture files. internalFolder + 'texturedMesh.mtl' |
| | | Output Textures | Folder for the output mesh: OBJ, material and texture files. internalFolder + 'texture_*.png' |

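If the mesh has no associated UVs, automatic UV maps are computed. AliceVision implements a basic UV mapping approach to minimize the texture space. The standard UV mapping approach is provided by [Levy2002].

For each triangle, the visibility information associated with each vertex is used to retrieve the texture candidates.

Cameras that do not have a good angle to the surface are filtered out, to favour fronto-parallel cameras, and the pixel values are finally averaged.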
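The candidate filtering described above can be sketched for a single triangle. This is an illustrative simplification with a hypothetical function name and data layout: each candidate carries a score, the angle between the viewing direction and the surface normal, and a colour. Reusing the values of the Angle Hard Threshold and Best Score Threshold settings as defaults is an assumption about how those settings act.

```python
def blend_candidates(candidates, angle_hard_threshold=90.0, best_score_threshold=0.1):
    """Average the colours of the cameras that see a triangle well enough.

    candidates: list of (score, angle_deg, (r, g, b)).
    A threshold of 0.0 disables the corresponding filter.
    Returns an (r, g, b) tuple, or None if no camera survives.
    """
    kept = [
        c for c in candidates
        if angle_hard_threshold <= 0.0 or c[1] < angle_hard_threshold
    ]
    if not kept:
        return None
    if best_score_threshold > 0.0:
        best = max(c[0] for c in kept)
        kept = [c for c in kept if c[0] >= best_score_threshold * best]
    return tuple(sum(c[2][k] for c in kept) / len(kept) for k in range(3))


if __name__ == "__main__":
    candidates = [
        (1.0, 10.0, (100, 100, 100)),   # fronto-parallel, high score
        (0.5, 30.0, (200, 200, 200)),   # acceptable
        (0.9, 120.0, (0, 0, 0)),        # sees the back of the surface: rejected
        (0.01, 5.0, (255, 0, 0)),       # score far below the best: rejected
    ]
    print(blend_candidates(candidates))
```

The plain average shown here is the naive variant; multi-band blending replaces it with a per-frequency-band average.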

Because a generalization of the multi-band blending described in [Burt1983] is used, more views are averaged in the low frequencies than in the high frequencies. This approach is in the spirit of [Baumberg2002] and [Allene2008].